Best scraper tools. Comparison of the most popular web scrapers

A scraper is a tool for extracting data from websites. A web scraper extracts the required information and either saves it to a local file on your computer or transfers it via an API. Scrapers are used for many purposes: collecting content to fill a site, searching for job vacancies or candidates, searching for real estate, collecting data for market analysis, analyzing articles, marketing research, and so on.

With so many scrapers available, the question is which one best suits your purposes. Most web scrapers are general-purpose and simple to use. Some scrapers (also called parsers) offer a free trial period, while others must be paid for before you can even test them. Some require extensive configuration and time to set up, while others are simple and quick to get started with.

To help you find the most convenient scraper for your purposes without spending a lot of time studying each one, we examined the most popular scrapers and collected their main characteristics in a table for comparison.

This spreadsheet shows the characteristics of the best scrapers:

Import.io

Import.io is a web scraping platform that converts semi-structured information in web pages into structured data, which can be used for anything from driving business decisions to integrating with apps and other platforms.

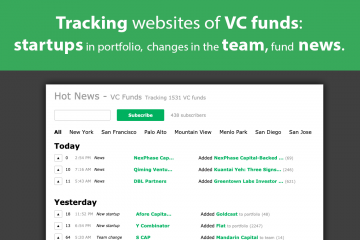

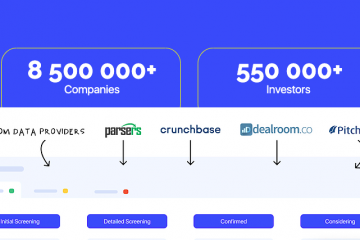

Parsers.me

Parsers is a scraper extension for extracting data from websites. You select the elements you need on a page, and the extension sends the XPath of each selected value to the server. A special program then analyzes the site and finds pages of the same type, retrieves the values you marked from each of them, and writes them to a file. Once the required number of pages has been processed, you can download the file with the results. The extension is easy to work with: you only need to give each field a name and select its value on any product card. Extracted data can be exported to an XLS, XLSX, CSV, JSON, or XML file.
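The idea of marking a value once and reusing its XPath on pages of the same type can be sketched in a few lines of Python. This is only an illustration, not how Parsers itself is implemented: the sample pages, the field names, and the XPath expressions are all made up, and the standard library's `xml.etree.ElementTree` is used, which supports only a subset of XPath and needs well-formed markup.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product pages standing in for "pages of the same type".
PAGES = [
    "<html><body><div class='card'><h1 class='title'>Kettle</h1>"
    "<span class='price'>19.99</span></div></body></html>",
    "<html><body><div class='card'><h1 class='title'>Toaster</h1>"
    "<span class='price'>34.50</span></div></body></html>",
]

# Field name -> XPath of the value marked on one sample page (assumed names).
FIELDS = {
    "name": ".//h1[@class='title']",
    "price": ".//span[@class='price']",
}


def extract(page, fields):
    """Apply every field's XPath to one page and return a record."""
    root = ET.fromstring(page)
    record = {}
    for name, xpath in fields.items():
        node = root.find(xpath)
        has_text = node is not None and node.text
        record[name] = node.text.strip() if has_text else None
    return record


def to_csv(records, fields):
    """Serialize the records as CSV text with one column per field."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(fields), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = [extract(page, FIELDS) for page in PAGES]
csv_text = to_csv(records, FIELDS)
json_text = json.dumps(records, indent=2)
print(csv_text)
print(json_text)
```

Real pages are rarely well-formed XML, so a production scraper would normally rely on a tolerant HTML parser with full XPath support instead of `ElementTree`.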

DataMiner Scraper

DataMiner Scraper is a data extraction tool that lets you scrape any HTML web page. You can extract tables and lists from any page and upload them to Google Sheets or Microsoft Excel.
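To illustrate what extracting a table from a page involves, here is a minimal sketch using only Python's standard library. It is not DataMiner's implementation: the `TableExtractor` class and the sample markup are hypothetical. The cells are collected row by row and written out as CSV, a format that spreadsheet applications such as Excel and Google Sheets can import.

```python
import csv
import io
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None outside <tr>
        self._cell = None  # text chunks of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


# Made-up sample table; a real scraper would receive the page's HTML instead.
SAMPLE_HTML = """
<table>
  <tr><th>Item</th><th>Qty</th></tr>
  <tr><td>Apples</td><td>3</td></tr>
  <tr><td>Pears</td><td>5</td></tr>
</table>
"""

parser = TableExtractor()
parser.feed(SAMPLE_HTML)

buffer = io.StringIO()
csv.writer(buffer, lineterminator="\n").writerows(parser.rows)
csv_text = buffer.getvalue()
print(csv_text)
```

The sketch ignores nested tables and merged cells, which a real extraction tool has to handle.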

Octoparse

Octoparse is aimed at people who want to scrape websites without learning to code. It includes a point-and-click interface that lets users scrape behind login forms, fill in forms, enter search terms, scroll through infinitely scrolling pages, render JavaScript, and more.

ParseHub

ParseHub is built to crawl single and multiple websites with support for JavaScript, AJAX, sessions, cookies and redirects. The application uses machine learning technology to recognize the most complicated documents on the web and generates the output file based on the required data format.

Dexi

Dexi supports data collection from any website and, like Webhose, requires no download. It provides a browser-based editor for setting up crawlers and extracting data in real time. You can save the collected data on cloud platforms such as Google Drive and Box.net, or export it as CSV or JSON.

Mozenda

Mozenda is a cloud web scraping service (SaaS) with useful utility features for data extraction. Mozenda’s scraper software has two parts: the Mozenda Web Console and the Agent Builder. The Mozenda Web Console is a web-based application that lets you run your Agents (scraping projects), view and organize your results, and export or publish the extracted data to cloud storage such as Dropbox, Amazon and Microsoft Azure. The Agent Builder is a Windows application used to build your data project.

80legs

80legs is a powerful yet flexible data scraping tool that can be configured based on customized requirements. It supports fetching huge amounts of data along with the option to download the extracted data instantly.

Scrapinghub

Scrapinghub is a developer-focused web scraping platform that offers several services for extracting structured information from the Internet. Scrapinghub has four major tools: Scrapy Cloud, Portia, Crawlera, and Splash. Scrapy Cloud lets you automate and visualize the activity of your Scrapy web spiders (Scrapy is an open-source data extraction framework).

Webhose

Webhose provides direct access to real-time and structured data from crawling thousands of online sources. The web scraper supports extracting web data in more than 240 languages and saving the output data in various formats including XML, JSON and RSS.

Content Grabber

Content Grabber is a powerful, multi-featured visual web scraping tool used for content extraction from the web. It is better suited to people with advanced programming skills, since it offers powerful script editing and debugging interfaces. Users can write or debug scripts in C# or VB.NET to control the crawling process programmatically.