How does a parser help optimize your work?

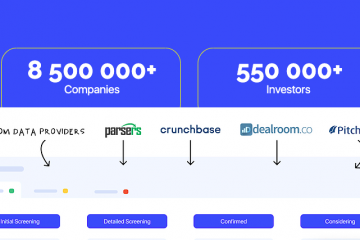

Scraping (parsing) is the process of parsing web pages and extracting data from websites.

A parser (also called a grabber, web data extractor, or web scraper) extracts large amounts of data and saves them to a local file on your computer. A web scraper is an effective tool for analyzing data from an entire website in a short amount of time.

A parser works much like a search engine. A search engine analyzes the contents of websites in response to a query and shows the results in the browser. A parser also analyzes the contents of a website, but it does so according to criteria you define (the fields you need) and writes the information to a file on your computer. A web scraper can go through thousands of pages of a website in a short time and filter the data it finds. It then selects the data that matches your criteria and exports it to a single file for further processing.
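The steps described above (analyze the page, pick out the fields you need, export them to one file) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sample HTML, the `product`, `name`, and `price` class names, and the `FieldParser` and `scrape_to_csv` helpers are all hypothetical, and the page is a hard-coded string rather than a downloaded one.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML instead.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">25.00</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">40.50</span></li>
  <li class="ad"><span class="name">Banner</span></li>
</ul>
"""


class FieldParser(HTMLParser):
    """Collects the text of <span> elements whose class is a wanted field."""

    def __init__(self, fields):
        super().__init__()
        self.fields = fields
        self.rows = []        # one dict per product found
        self._current = None  # row being filled, or None outside a product
        self._field = None    # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self._current = {}
        elif tag == "span" and self._current is not None and css_class in self.fields:
            self._field = css_class

    def handle_data(self, data):
        if self._field is not None:
            previous = self._current.get(self._field, "")
            self._current[self._field] = previous + data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current is not None:
            self.rows.append(self._current)
            self._current = None


def scrape_to_csv(html, fields):
    """Parse the HTML and return the selected fields as CSV text."""
    parser = FieldParser(fields)
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()


csv_text = scrape_to_csv(SAMPLE_HTML, ["name", "price"])
print(csv_text)
```

The `ad` item is skipped because it does not match the selection criteria, which is the filtering step in miniature. Writing to a real file instead of a string would only require passing an open file object to `csv.DictWriter`.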

A parser has a number of advantages when working with large data sets:

- High processing speed

- Analysis of huge amounts of information

- Automated selection (it precisely selects and separates the necessary data)

But there is also a drawback: scraped content is not unique, which negatively affects SEO.

Why a parser is necessary:

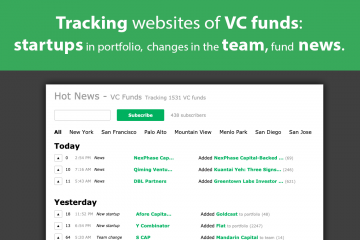

- To keep information up to date.

Examples include currency exchange rates or the results of sports matches. Such data is inconvenient to change manually because the web resource needs daily attention. To make sure the site always has the most up-to-date information without spending a lot of time updating it yourself, the best solution is to use a web scraper (parser).

- To update pages automatically.

For example, you have an informational website but not enough time to update it. A user visits your site, returns after a while, and sees no updates. The site will seem uninteresting to them, and as a result, visits to your website will drop. A web scraper (parser) helps solve this problem by automatically adding news, new articles on various topics, and other information from resources on similar subjects.

- To quickly fill a website with the necessary information.

Suppose you have an online store. The site is ready, but it has no product cards. Usually, content managers fill the website by hand, which takes a lot of time. With a parser script or website scraper, you can add a large number of product cards in a short time.

- Integration (combining, centralization) of information.

The Internet contains a huge amount of information. Some of it covers the same topic but is spread across different websites. Using a site content parser, you can bring all the necessary information together on one page. News is a good example: users are much more comfortable on a site where articles from different Internet resources about the news that interests them are collected on one page.
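Combining information from several sources can be sketched as a merge step that runs after scraping. The example below assumes the articles have already been extracted into dictionaries. The `source_a` and `source_b` lists, their URLs, and the `aggregate` helper are hypothetical and stand in for real scraped results.

```python
from datetime import date

# Hypothetical, already-scraped results from two different sources.
source_a = [
    {"title": "Rates rise", "url": "https://a.example/rates", "published": date(2024, 5, 2)},
    {"title": "Cup final", "url": "https://a.example/cup", "published": date(2024, 5, 1)},
]
source_b = [
    {"title": "Cup final recap", "url": "https://b.example/cup", "published": date(2024, 5, 3)},
    {"title": "Rates rise", "url": "https://a.example/rates", "published": date(2024, 5, 2)},
]


def aggregate(*sources):
    """Merge article lists, drop repeated URLs, and sort newest first."""
    seen = set()
    merged = []
    for articles in sources:
        for article in articles:
            if article["url"] not in seen:
                seen.add(article["url"])
                merged.append(article)
    return sorted(merged, key=lambda a: a["published"], reverse=True)


feed = aggregate(source_a, source_b)
for article in feed:
    print(article["published"], article["title"])
```

Deduplicating by URL is one simple choice. Articles that are republished under different URLs would need a different key, such as a normalized title.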

You can try scraping any site and see for yourself that this is an excellent tool for your work.

Examples of using the parser:

There are many examples of using a parser. Here are some of them:

- Travel companies: updating information about vacation destinations, living conditions, weather, and museum opening hours.

- Online stores: product descriptions and monitoring of competitors' prices.

- News websites: collecting fresh articles from different platforms.

- Travel planning: extracting hotel and airline data to create the most convenient tourist routes.

- Public opinion analysis: collecting reviews about a company from different sources.

- Event organization: collecting data on thousands of events to create an application.

- Staff recruitment and job search: downloading résumés or vacancies from different sources.

- Information updates: checking for product updates and the appearance of new products.
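Two items from this list, monitoring competitors' prices and checking for new products, come down to comparing one scrape with the next. A minimal sketch follows, assuming each scrape has already been reduced to a dictionary of product names and prices. The `diff_snapshots` helper and the sample data are hypothetical.

```python
def diff_snapshots(old, new):
    """Compare two {product: price} snapshots from consecutive scrapes."""
    # Products present in the new scrape but not in the old one.
    added = sorted(name for name in new if name not in old)
    # Products whose price differs, mapped to (old_price, new_price).
    changed = {
        name: (old[name], new[name])
        for name in new
        if name in old and old[name] != new[name]
    }
    return added, changed


# Hypothetical results of two scrapes of the same competitor catalog.
yesterday = {"Kettle": 25.00, "Toaster": 40.50}
today = {"Kettle": 23.50, "Toaster": 40.50, "Blender": 60.00}

added, changed = diff_snapshots(yesterday, today)
print("New products:", added)
print("Price changes:", changed)
```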