What is a website scraper or parser?

A scraper (parser) is a program that collects data and converts it into a structured format, most often working with text.

Suppose you need to add a thousand product cards to your online store. That means collecting a lot of information, processing it, rewriting it and filling out the cards.

A scraper scans web pages and search engine results and copies information of a single type (text or images) or of mixed types (text and pictures). Scrapers make it possible to process huge volumes of continuously updated values.

So, let’s take a closer look at what a site scraper is and how it helps to process big data.

The program operates according to a given algorithm and compares certain expressions with the content it finds on the Internet. Each expression consists of characters and specifies the search rule.
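As a rough illustration of such a search rule, the sketch below uses a regular expression to pull prices out of a page fragment. The sample markup, the `PRICE_RULE` pattern and the `find_prices` helper are assumptions invented for this example, not part of any particular scraper.

```python
import re

# Hypothetical search rule: a dollar sign, one or more digits,
# and an optional two-digit cents part.
PRICE_RULE = re.compile(r"\$\d+(?:\.\d{2})?")


def find_prices(page_text):
    """Return every substring of page_text that matches the search rule."""
    return PRICE_RULE.findall(page_text)


# Made-up page fragment used only for the demonstration.
sample_page = "<li>Kettle $24.99</li><li>Toaster $31</li><li>Out of stock</li>"
print(find_prices(sample_page))  # ['$24.99', '$31']
```

A different rule (for example, one matching model numbers) would only require changing the pattern, not the surrounding code.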

Scrapers of this kind come in various presentation formats, design styles, access options, languages and markup methods, and they can be configured to copy the content of the selected web resource fully or partially.

Site scrapers work in several stages:

- Searching for the necessary information in its original form: accessing the code of the Internet resource and downloading it.

- Extracting values from the code of the web page, separating the required material from the rest of the markup.

- Forming a report according to the specified requirements (writing the information directly to databases or text files).
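The three stages can be sketched with the Python standard library alone. This is a minimal sketch under stated assumptions: the choice of `<h2>` as the element of interest, the function names and the sample markup are all hypothetical, and the demonstration runs on an inline string so that it works without network access.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import urlopen


def download(url):
    """Stage 1: fetch the raw HTML of a page (not called in the offline demo)."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TitleExtractor(HTMLParser):
    """Stage 2: collect the text of every <h2> element, ignoring other markup."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_h2 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2:
            self.titles[-1] += data


def extract_titles(html):
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return [title.strip() for title in parser.titles]


def write_report(titles, out):
    """Stage 3: write the extracted values as CSV rows to a file-like object."""
    writer = csv.writer(out)
    writer.writerow(["position", "title"])
    for position, title in enumerate(titles, start=1):
        writer.writerow([position, title])


# Offline demonstration on a made-up page.
sample_html = (
    "<html><body><h2>Red kettle</h2><p>text</p>"
    "<h2> Blue <b>toaster</b></h2></body></html>"
)
titles = extract_titles(sample_html)
report = io.StringIO()
write_report(titles, report)
print(report.getvalue())
```

For a live page, `download(url)` would supply the HTML instead of `sample_html`, and `out` could be an open file rather than an in-memory buffer.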

Site scrapers have a number of definite advantages when working with big data:

- High processing speed (from a few hundred to a few thousand pages per minute)

- Analysis of large amounts of data

- Automation of the selection process (the scraper precisely selects and separates the necessary information)

When a site scraper is used

Let's look at how scraping an array of data and extracting the necessary information from it is used in practice.

- Filling online stores.

Used to fill a store with content of a uniform kind: product descriptions and technical characteristics that are not intellectual property, such as price, model, color, size and pictures. The collection program runs frequently and automatically parses the content to update the database.

- Tracking ads.

Common among real estate brokers, car dealers and resellers in other areas. The scraper can collect photos or text from a website.

- Receiving content from other sites.

This is the most popular use of this type of software: filling a site with content.

Examples of site scrapers used for this type of data collection:

- Travel companies: updating information about vacation destinations, living conditions, weather and museum opening hours.

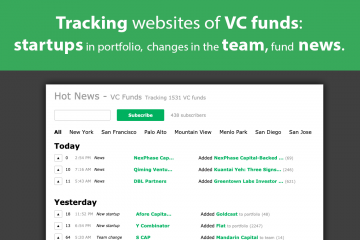

- Online news resources: collecting “hot” information from certain sites.

- Updating “constant” information. Such a scraper is not launched often, mainly just to check for new products on certain websites.

- Collecting information from social networks: from social networks to a web page, from one social network to another, from one community to another.

- Automatically collecting contact information for a list of VKontakte accounts and saving it in any convenient format. The volume and composition of the collected material depend on the privacy settings of the accounts.

- Collecting the IDs of active group members in order to later offer them a reward for advertising a website. It also lets you automatically evaluate the audience of each active subscriber and track when the person was last on the social network.
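Returning to the online store case described earlier in this section, a recurring run that refreshes the product database might look roughly like the sketch below. The `products` table, its columns and the sample rows are assumptions made for illustration; `INSERT OR REPLACE` is used so that a repeated run overwrites a product that is already stored.

```python
import sqlite3


def update_products(conn, scraped_rows):
    """Insert new products and overwrite existing ones, keyed by model."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "model TEXT PRIMARY KEY, price REAL, color TEXT)"
    )
    # INSERT OR REPLACE replaces the whole row when the model already exists.
    conn.executemany(
        "INSERT OR REPLACE INTO products (model, price, color) VALUES (?, ?, ?)",
        scraped_rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")

# First run: two made-up products.
update_products(conn, [("K-100", 24.99, "red"), ("T-200", 31.0, "blue")])

# A later run finds a new price for K-100 and one new product.
update_products(conn, [("K-100", 22.49, "red"), ("M-300", 59.0, "black")])

rows = conn.execute(
    "SELECT model, price, color FROM products ORDER BY model"
).fetchall()
print(rows)
```

After the second run the table holds three products, with K-100 at its updated price.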

Scraping in search marketing

Required for:

- Extracting contact information.

Used when creating a database of potential customers for subsequent advertising; it is aimed at collecting email addresses.

- Searching your own database.

A site scraper lets you find the necessary content in the database of your own web resource. In this case it looks not for external links but for occurrences of the search query that the user has entered.

- Collecting links for SEO.

SEO specialists collect the links on a site to evaluate how many there are and which resources they point to, and to remove unnecessary ones.

When you have to deal with several hundred links, a scraper becomes the optimizer's best tool. It lets you collect all the information about the links and copy it in a convenient form.
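Link collection of this kind can be sketched as follows. The `LinkCollector` class, the `split_links` helper and the example URLs are hypothetical; the sketch resolves each `href` against the page address and separates links that stay on the same host from those that lead elsewhere.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the address of the page.
            self.links.append(urljoin(self.base_url, href))


def split_links(html, base_url):
    """Return (internal, external) link lists, compared by host name."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    site = urlparse(base_url).netloc
    internal = [u for u in collector.links if urlparse(u).netloc == site]
    external = [u for u in collector.links if urlparse(u).netloc != site]
    return internal, external


# Made-up page fragment and address.
sample = (
    '<a href="/about">About</a> '
    '<a href="https://other.example/x">Partner</a> '
    '<a href="contact.html">Contact</a>'
)
internal, external = split_links(sample, "https://shop.example/catalog/")
print(internal)
print(external)
```

Comparing by host name is a simplification: links without a host, such as `mailto:` addresses, would land in the external list and may need separate handling.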

Another use in optimization is creating a site map. When there are many links, assembling the file by hand takes a long time. In this case, the software checks all the internal links on the chosen site, and you only select the format of the final file.
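If XML is chosen as the final file type, turning a list of internal links into a site map could look like the sketch below. The `build_sitemap` helper and the example URLs are assumptions; the `urlset`, `url` and `loc` elements and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML as a string, with duplicate URLs removed and sorted."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(set(urls)):
        loc = ET.SubElement(ET.SubElement(urlset, "url"), "loc")
        loc.text = url
    return ET.tostring(urlset, encoding="unicode")


# Made-up internal links, including one duplicate.
internal_links = [
    "https://shop.example/catalog/",
    "https://shop.example/about",
    "https://shop.example/catalog/",
]
sitemap_xml = build_sitemap(internal_links)
print(sitemap_xml)
```

A production file would normally also carry an XML declaration and optional fields such as `lastmod`; they are left out here to keep the sketch short.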

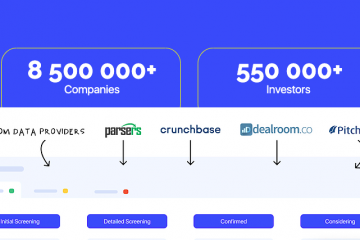

Simplify your life wherever possible. You can download site scrapers and try them for free right now.