What is a web scraper for?

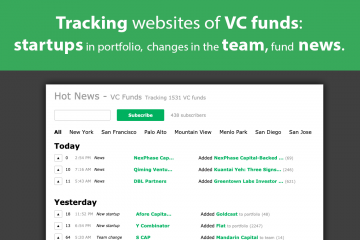

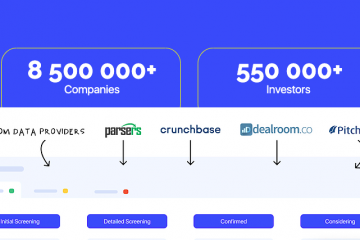

Today, information on the web is updated very quickly. Tracking it by hand is difficult and time-consuming, and it is easy to miss something important. This is why special programs called web scrapers were created: they automatically collect and analyze the data you are interested in, and they can handle large volumes of continuously updated values.

What is a web scraper?

A web scraper is a program or bot (also called a parser, web grabber, or script) that analyzes the content of web pages. It collects data and gives it a structure. To do this, the scraper parses the page text according to a formal model, such as a set of rules describing where the needed data sits in the page's markup.

A person reading text does something similar: they perform syntactic analysis, comparing the words they read against their vocabulary and the rules of grammar.

Such programs are widely used. They differ in purpose, but they all work on the same principle: information is collected according to given criteria, and the result is structured data that is then put to its intended use.
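To make the principle concrete, here is a minimal sketch that uses only Python's standard `html.parser` module. It assumes the HTML has already been downloaded (in practice it might be fetched with `urllib.request.urlopen`) and collects two items according to fixed rules: the page title and the targets of all links. The names `PageScraper` and `scrape`, and the sample page, are hypothetical.

```python
from html.parser import HTMLParser


class PageScraper(HTMLParser):
    """Collects the page title and every link target (href) from HTML."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False  # True while reading text inside <title>

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")  # attrs is a list of (name, value) pairs
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def scrape(html):
    """Parse raw HTML and return the collected data as a structured dict."""
    parser = PageScraper()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


sample = """<html><head><title>Example Shop</title></head>
<body><a href="/catalog">Catalog</a> <a href="/contacts">Contacts</a></body></html>"""

print(scrape(sample))
# {'title': 'Example Shop', 'links': ['/catalog', '/contacts']}
```

The unstructured page goes in, and a dictionary with named fields comes out; that structured result is what the rest of a scraping pipeline works with.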

What is it used for?

Collecting and analyzing information on the Internet takes a lot of time, effort, and resources. An automated parser handles this task faster and more easily: within 24 hours it can browse a large volume of web content in search of the necessary data and analyze it.

Search engine robots and plagiarism (uniqueness) checkers work the same way, analyzing hundreds of web pages with similar text at high speed.

Likewise, a web scraper can help you find content to fill your own website.
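The uniqueness checkers mentioned above need some measure of how similar two texts are. One common approach, shown here as an illustrative sketch rather than the method any particular checker uses, splits each text into overlapping three-word sequences ("shingles") and computes the Jaccard similarity of the two sets, that is, the share of shingles they have in common. The function names and the choice of `k=3` are assumptions for illustration.

```python
import re


def shingles(text, k=3):
    """Return the set of overlapping k-word sequences in the text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def similarity(a, b, k=3):
    """Jaccard similarity of two shingle sets: 0.0 (nothing shared) to 1.0 (same shingles)."""
    sa, sb = shingles(a, k), shingles(b, k)
    union = sa | sb
    if not union:  # both texts are shorter than k words
        return 0.0
    return len(sa & sb) / len(union)


original = "the quick brown fox jumps over the lazy dog"
rewrite = "the quick brown fox leaps over the lazy dog"
print(similarity(original, rewrite))  # 0.4 (4 shared shingles out of 10)
```

Changing a single word breaks every shingle that contains it, which is why the score drops well below 1.0 even though most words match. A checker would then flag pages whose score exceeds some threshold chosen for the task.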

A web scraper can collect the following content:

- product lists with their properties, photos, and descriptions, as well as other texts;

- web pages with errors (e.g. 404, missing title);

- competitors' prices for goods;

- user activity level (likes, comments, reposts);

- potential audience for advertising and promotion of goods and services.
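For web pages with errors, a scraper can flag problem pages as it crawls. The sketch below assumes each page has already been fetched and is given as an HTTP status code plus its HTML. It reports status codes of 400 or above (such as 404) and pages whose `<title>` is absent or empty. `TitleFinder`, `find_problems`, and the sample URLs are hypothetical.

```python
from html.parser import HTMLParser


class TitleFinder(HTMLParser):
    """Records the text of the first <title> element, or None if there is none."""

    def __init__(self):
        super().__init__()
        self.title = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_problems(status, html):
    """Return a list of problems found on one fetched page (empty if none)."""
    problems = []
    if status >= 400:  # client or server error, e.g. 404 Not Found
        problems.append(f"HTTP {status}")
    finder = TitleFinder()
    finder.feed(html)
    finder.close()
    if not (finder.title or "").strip():
        problems.append("missing title")
    return problems


pages = {
    "/ok": (200, "<html><head><title>Home</title></head></html>"),
    "/gone": (404, "<html><head><title>Not Found</title></head></html>"),
    "/untitled": (200, "<html><head></head><body>Hi</body></html>"),
}
for url, (status, html) in pages.items():
    print(url, find_problems(status, html))
```

Run over a whole site, the non-empty results form a list of pages to fix.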

Online store owners use web scrapers to gather content for their product cards. Such descriptions are often not treated as intellectual property, but writing them by hand takes a lot of time and effort.
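Filling product cards usually means pulling the same fields out of every card on a catalog page. The markup in this sketch is an assumption: real stores use their own structure, so the hypothetical class names `product`, `name`, and `price` would need to be adapted to the target site. Prices are converted to numbers assuming `.` as the decimal separator.

```python
from html.parser import HTMLParser


class ProductCardParser(HTMLParser):
    """Collects text of hypothetical .name and .price elements inside .product cards."""

    FIELDS = {"name", "price"}  # hypothetical CSS class names

    def __init__(self):
        super().__init__()
        self.cards = []
        self._field = None      # field currently being read, e.g. "name"
        self._field_tag = None  # tag that opened that field, e.g. "span"

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.cards.append({})  # a new product card starts here
            return
        for cls in classes:
            if cls in self.FIELDS and self.cards:
                self._field, self._field_tag = cls, tag
                self.cards[-1][cls] = ""

    def handle_endtag(self, tag):
        if tag == self._field_tag:  # the field's element has closed
            self._field = self._field_tag = None

    def handle_data(self, data):
        if self._field:
            self.cards[-1][self._field] += data


def parse_products(html):
    """Return one {"name", "price"} dict per product card; price is a float or None."""
    parser = ProductCardParser()
    parser.feed(html)
    parser.close()
    products = []
    for card in parser.cards:
        raw_price = card.get("price", "")
        # keep digits and the decimal point only, e.g. "$1,299.00" -> "1299.00"
        digits = "".join(ch for ch in raw_price if ch.isdigit() or ch == ".")
        products.append({
            "name": card.get("name", "").strip(),
            "price": float(digits) if digits else None,
        })
    return products


catalog = """
<div class="product"><span class="name">Blue mug</span><span class="price">$12.50</span></div>
<div class="product"><span class="name">Teapot</span><span class="price">$30</span></div>
"""
print(parse_products(catalog))
# [{'name': 'Blue mug', 'price': 12.5}, {'name': 'Teapot', 'price': 30.0}]
```

The same parser applied to a competitor's catalog would yield the price list mentioned earlier, provided the markup rules match that site.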

A web scraper helps with the following tasks:

- Parsing content in large volumes. Growing competition forces businesses to process and publish huge amounts of information on their websites, and handling that scale manually is no longer feasible.

- Keeping content up to date. One person, or even a whole team of operators, cannot keep up with a large, constantly changing flow of information. Data can change every minute, so tracking it manually is impractical.

A web scraper is a modern, effective way to parse content automatically and keep it continuously updated.
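Continuous updating implies revisiting pages and noticing what changed. One simple way to do this is to store a hash of each page's content after every run and compare it on the next run, so that only pages whose hash differs need to be reprocessed. The sketch below is written under that assumption and uses SHA-256 from `hashlib`; the function names and the sample pages are hypothetical.

```python
import hashlib


def fingerprint(content):
    """SHA-256 hex digest of a page's text content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def detect_changes(previous, pages):
    """Compare freshly fetched pages with fingerprints from the previous run.

    previous: dict of URL -> fingerprint saved last time
    pages:    dict of URL -> freshly fetched content
    Returns (sorted list of changed or new URLs, fingerprints to save for next run).
    """
    current = {url: fingerprint(text) for url, text in pages.items()}
    changed = sorted(url for url, fp in current.items() if previous.get(url) != fp)
    return changed, current


# First run: nothing stored yet, so every page counts as new.
changed, stored = detect_changes({}, {"/mug": "Blue mug $12.50", "/teapot": "Teapot $30"})
print(changed)  # ['/mug', '/teapot']

# Second run: only the mug's price has changed.
changed, stored = detect_changes(stored, {"/mug": "Blue mug $11.99", "/teapot": "Teapot $30"})
print(changed)  # ['/mug']
```

Hashing the whole page treats any change, including rotating ads or timestamps, as a real change; hashing only the extracted fields (such as name and price) avoids those false alarms.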

The advantages of using a web scraper are:

- Speed. A scraper can go through hundreds of web resources far faster than a person could.

- Accuracy. It separates technical information (such as markup and metadata) from human-readable content.

- Fewer errors. The script selects only the data you need.

- Efficiency. The web scraper can convert the collected data into whatever format you need.
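As an example of converting collected data into different formats, the sketch below turns a list of records, such as the product data a scraper might produce, into CSV text and JSON text using the standard `csv` and `json` modules. The records and function names are hypothetical; writing to files works the same way, opening CSV files with `open(..., newline="")`.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize a list of dicts to pretty-printed JSON text."""
    return json.dumps(records, ensure_ascii=False, indent=2)


records = [
    {"name": "Blue mug", "price": 12.5},
    {"name": "Teapot", "price": 30.0},
]
print(to_csv(records, ["name", "price"]))
print(to_json(records))
```

CSV opens directly in spreadsheet software, while JSON keeps types such as numbers intact for other programs; because the scraper's output is already structured, switching formats is a matter of choosing a different serializer.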