Why has web scraping become popular?

Today, companies in every sector depend heavily on data about their market, their competitors, the global industry, statistics, and many other indicators that are vital to their survival.

Data is available everywhere, and the Internet is the primary source for many companies and industries. Until recently, however, this data was collected manually, which took time and money.

Although workers were trained for this task and entire companies were built around gathering data of interest, manual collection was not only slower and more expensive but also prone to many errors.

Relying on data analysts introduced human error, which posed a risk to anyone using the data, since those errors could have negative consequences. Even so, the data remained necessary and useful.

With the development of automated data collection technology, time, cost, and errors all decreased considerably. New tools and resources now make it possible to obtain accurate, relevant data in a fraction of the time it once took.

This process is known as web scraping and has multiple applications in modern companies.

How does web scraping work?

The process itself is simple: using specific tools and search criteria, the scraper “sweeps” the Internet for the required data, which is then stored in one place until the company puts it to use. Unlike manual collection, this process delivers accurate, high-quality data on a larger scale and in less time, which has a positive effect on the final analysis of the data.
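The sweep-then-store cycle described above can be sketched with nothing but the Python standard library. This is a minimal illustration, not a production scraper: it assumes a hypothetical catalog page whose products are marked up as `<div class="product">` blocks containing `<span class="name">` and `<span class="price">`, and the names `ProductParser`, `fetch`, `extract`, and `store` are invented for this example. The "search criteria" here are simply "a product block with both a name and a price"; the results go into a SQLite table so they sit in one place for later analysis.

```python
import sqlite3
import urllib.request
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects (name, price) pairs from hypothetical markup of the form
    <div class="product"><span class="name">...</span><span class="price">...</span></div>."""

    def __init__(self):
        super().__init__()
        self.rows = []        # extracted (name, price) tuples
        self._field = None    # "name" or "price" while inside a matching <span>
        self._current = {}    # fields collected for the product being parsed

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = self._current.get(self._field, "") + data

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "div" and "name" in self._current and "price" in self._current:
            # Search criterion (assumed): a product block with both a name and a price.
            price = float(self._current["price"].strip().lstrip("$"))
            self.rows.append((self._current["name"].strip(), price))
            self._current = {}


def fetch(url):
    """Sweep step: download one page (no retries or rate limiting in this sketch)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def extract(html):
    """Apply the search criteria to the raw HTML."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def store(rows, db_path=":memory:"):
    """Keep the results in one place (a SQLite table) for later analysis."""
    conn = sqlite3.connect(db_path)
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", rows)
    conn.commit()
    return conn
```

A typical run would chain the three steps, for example `store(extract(fetch("https://example.com/catalog")), "scraped.db")`, where the URL is only a placeholder. Separating fetching from extraction keeps the parsing logic testable on saved HTML without touching the network.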

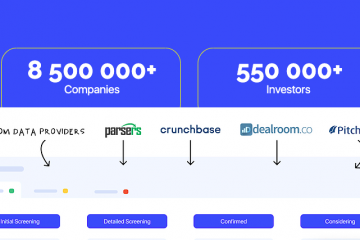

Because the process is automated, it covers a greater volume of information and therefore collects more data, of better quality. The many ways companies use data demand both volume and precision, and automation provides both.

Lower costs benefit both companies and users, who get better results with less effort. Some companies that use web scrapers also show improvements in their internal processes.

Web scraping has many advantages. One of the most notable is that companies that once could not afford to extract data, and therefore missed out on valuable studies of many aspects of their operations, can now use this technology to study many internal and external variables and improve their quality and market presence.

Uses of web scrapers

Companies can put the data obtained with web scrapers to many uses, including all kinds of research, such as:

- Gauge demand by geographical location

- Analyze in detail the products or services offered by the company's main competitors, or at least one of them

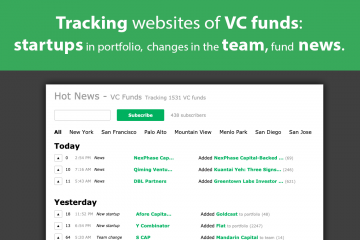

- Monitor changes to competitors' web pages or websites

- Learn how customers respond to the product or service offered

- Analyze the areas of the company that can be improved

- Get a more detailed view of the market
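Monitoring competitors' pages, one of the uses listed above, usually comes down to comparing each new scrape with the previous one. The sketch below is one simple way to do that, not a standard method: it stores a SHA-256 fingerprint plus the text of each page in a plain dictionary (a hypothetical stand-in for a real database) and, when the fingerprint changes, uses `difflib.unified_diff` to show which lines differ. The function names are invented for this example.

```python
import difflib
import hashlib


def fingerprint(html):
    """Return a SHA-256 digest of the page content."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def check_for_changes(snapshots, url, html):
    """Compare a freshly scraped page with the last stored snapshot.

    `snapshots` maps url -> (digest, text). Returns a list of unified-diff
    lines; an empty list means nothing changed. A page seen for the first
    time is recorded and reported as unchanged, since there is no baseline yet.
    """
    digest = fingerprint(html)
    previous = snapshots.get(url)
    snapshots[url] = (digest, html)  # always keep the latest version
    if previous is None or previous[0] == digest:
        return []
    return list(difflib.unified_diff(
        previous[1].splitlines(), html.splitlines(),
        fromfile="previous", tofile="current", lineterm=""))
```

Comparing digests first avoids running a diff on every unchanged page; in practice, pages often contain timestamps or ads that change on every load, so real monitors typically fingerprint only the extracted fields rather than the raw HTML.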

These are just some of the ways companies can use data obtained through web scraping.

What benefits and advantages do web scrapers bring to companies?

These tools are very useful for many companies and industries, since major management and strategic decisions can depend on sound data management. Especially when a business plans to grow, having reliable, constantly updated databases can make the difference between success and failure.

A company with up-to-date data and the ability to analyze it holds a powerful tool: a competitive advantage that sets it apart from its direct competitors and from the market in general.

Companies of all kinds generate and use data constantly, and extracting data quickly and reliably keeps them alert to market changes so they can respond appropriately and on time.

Some areas where web scraping can be useful

Data analysis and visualization is one such area. The volume of data constantly being generated can be overwhelming, but with a mechanism to extract it, the task is reduced to analyzing the data; depending on the extraction criteria, part of that analysis may already be done during extraction.

This data and its analysis then become inputs for the company's decision-making, making management and day-to-day work much easier.

Research and development is an essential preliminary step before introducing a new product or service in a given market.

Here, web scrapers make a major contribution by detailing the characteristics of the products or services offered by competitors; based on this analysis, the company can develop a stronger product for the market.

Market analysis is another major use for the data obtained, most of which comes from the Internet. These analyses include reviewing publications, websites, blogs, news, and an endless amount of other information that can be used to build a profile of the potential consumer and, based on that knowledge, adapt the product to meet demand.

Price comparison is another common use: data obtained with a web scraper is frequently used to track price changes in competing products, so the company can adjust its own product or service and compete with a comparative advantage based on price.
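Once competitor prices have been scraped, the comparison itself is simple arithmetic. The sketch below assumes the prices are already available as a dictionary of competitor name to price (the names and values in any real use would come from the scraper; none are given in this article) and reports each competitor's price as a percentage difference from the company's own price, with negative values meaning the competitor is cheaper. The function names are hypothetical.

```python
def price_gaps(our_price, competitor_prices):
    """Percentage difference of each competitor's price relative to ours.

    Negative values mean the competitor is cheaper than we are.
    """
    if our_price <= 0:
        raise ValueError("our_price must be positive")
    return {
        name: round((price - our_price) / our_price * 100, 1)
        for name, price in competitor_prices.items()
    }


def cheaper_competitors(our_price, competitor_prices):
    """Names of competitors currently undercutting us, cheapest first."""
    gaps = price_gaps(our_price, competitor_prices)
    return sorted((name for name, gap in gaps.items() if gap < 0), key=gaps.get)
```

For example, with our price at 100 and competitors priced at 90, 110, and 95, the gaps are -10.0%, +10.0%, and -5.0%, so the two cheaper competitors are the ones to watch when deciding on a price adjustment. Expressing gaps as percentages rather than absolute amounts makes products at very different price points comparable.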

These are the main reasons why extracting and analyzing data quickly and efficiently gives companies a significant set of advantages, and they explain the growing interest in developing and using web scraping as a tool in the decision-making process.

The popularity of this resource is more than justified, and it is easy to understand why entrepreneurs and business owners are interested in web scraping: there is little doubt that it is the best option for anyone who needs this kind of data.